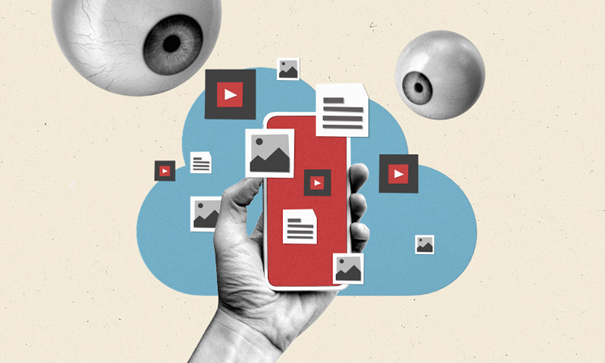

Every day, Europeans send billions of messages through social media applications, online gaming sites and other messaging platforms. While the vast majority concern personal or professional matters, some contain illegal material or are linked to criminal activity, or to the intent to commit it.

Indeed, some messages or uploads dwell in the darkest corners of the digital sphere, implicating senders and recipients in one of society’s most heinous crimes: the sexual abuse of minors.

In 2020, in the midst of the COVID-19 pandemic, top EU officials began developing a legislative proposal aimed at tackling this scourge by identifying, reporting and removing child sexual abuse material (known as CSAM) online, thereby bolstering the protection of minors.

While few question the importance of this goal, the proposal, launched by EU Home Affairs Commissioner Ylva Johansson in 2022, sparked heated debate in Brussels and in several European capitals.

Critics in parliaments, academia and civil society pointed to the enormous risks the proposal posed to the privacy of European citizens and to the security of internet systems. They also stressed the limited effectiveness of the proposed solutions.

🙏thank you @giacomo_zando @Balkanizator & @LudekStavinoha for revealing the EU law enforcement & AI company mass surveillance aspirations animating the EU push to scan everyone’s private messages. It’s critical that people understand what’s going on here https://t.co/ZU7OHLVJXs

— Meredith Whittaker (@mer__edith) September 29, 2023

Web of interests

After nine months of digging, this cross-border investigation uncovered key details of a web of interests and groups supporting the legislation, which was still under discussion at the time of publication.

Behind the push to introduce a legal basis for mandatory “client-side scanning”, a way to monitor interpersonal communications on users’ devices, before they are encrypted, in search of images depicting abuse, stand powerful law enforcement agencies and entrepreneurs-turned-philanthropists with high stakes in the growing market for artificial intelligence technologies.

Drawing on freedom-of-information requests, leaked documents and access to insiders and other sources, the journalists uncovered details that help map the close connections and money trails between top EU officials working on this file and some of the most prominent interest groups.

Among them is Thorn, a US-based organisation that develops and sells AI-based technology to scan and “cleanse” the internet. Thorn was co-founded by Hollywood star Ashton Kutcher, who became the most recognisable face of a carefully orchestrated and well-funded campaign in support of the proposal.

🔴 Minutes from the @Europol commission, obtained by #BIRN, show that the agency requested unlimited access to the data that would be obtained under a proposed new scanning system for detecting #CSAM on messaging apps, and that no boundaries be set on how this data is used.

— Balkan Insight (@BalkanInsight) September 29, 2023

The investigation shows that the European Commission’s proposal risks severely undermining end-to-end encrypted messaging, widely considered the most effective way to secure communications, including for journalists and activists at risk of being monitored by governmental and private entities alike.

Evidence collected by the journalists also points to real risks that the costly data infrastructure envisaged by the proposal could be misused by security agencies to search for information other than CSAM and to fine-tune their AI systems.

The team

The freelance journalists who collaborated on the investigation were Ludek Stavinoha (Czech Republic/United Kingdom), Giacomo Zandonini (Italy) and Apostolis Fotiadis (Greece).

See the stories below.